May 10, 2006

Motiva

Philips has announced the launch of the remote monitoring system Motiva for the U.S. market (press release). The system is an interactive healthcare platform which uses broadband television, along with home vital sign measurement devices, to connect patients to their healthcare providers and medical support system.

Motiva was the winner of a Medical Design Excellence Award and was named one of the "Top 5 Disease Management Ideas of 2005".

22:00 Posted in Pervasive computing | Permalink | Comments (0) | Tags: Positive Technology

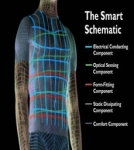

SmartShirt

The company Sensatex is looking for beta testers for its SmartShirt System, a wearable physiological information management platform based on technology developed at the Georgia Institute of Technology.

From the website:

The SmartShirt is made using any type of fiber. It is woven or knitted incorporating a patented conductive fiber/sensor system designed specifically for the intended biometric information requirements. Heart rate, respiration, and body temperature are all calibrated and relayed in real time for analysis.

According to Sensatex, the shirt can be used to remotely monitor home-based patients, first responders, hazardous materials workers and soldiers. It also can be used to monitor signs of fatigue in truck drivers and to support athletic training.
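The website only says that readings are "calibrated and relayed in real time". As a rough illustration of what that step can look like, here is a minimal sketch assuming a simple linear gain/offset calibration per channel and a JSON record as the relay format. The channel names, gain/offset values, and the `make_record` helper are hypothetical, not Sensatex's actual design.

```python
import json
import time
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class Calibration:
    """Hypothetical linear calibration: value = gain * raw + offset."""
    gain: float
    offset: float

    def apply(self, raw: int) -> float:
        return self.gain * raw + self.offset


# Hypothetical per-channel calibrations; the numbers are illustrative only.
CALIBRATIONS: Dict[str, Calibration] = {
    "body_temp_c": Calibration(gain=0.01, offset=30.0),
    "respiration_bpm": Calibration(gain=0.1, offset=0.0),
}


def make_record(raw_readings: Dict[str, int], timestamp: Optional[float] = None) -> str:
    """Convert raw sensor counts to calibrated units and serialize one JSON record for relay."""
    ts = time.time() if timestamp is None else timestamp
    values = {
        name: round(CALIBRATIONS[name].apply(raw), 2)
        for name, raw in raw_readings.items()
    }
    return json.dumps({"t": ts, "values": values}, sort_keys=True)
```

For example, raw counts of 700 (temperature) and 150 (respiration) become 37.0 °C and 15.0 breaths per minute under these made-up calibrations.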

21:53 Posted in Wearable & mobile | Permalink | Comments (0) | Tags: Positive Technology

May 09, 2006

Promoting Innovation in Electromyography

The competition is open to anyone with an interest in the field of electromyography. Participants submit a summary of their innovative work for a chance to win a complete Bagnoli 8-Channel EMG System, EMGworks Signal Acquisition and Analysis Software and a National Instruments Data Acquisition card for desktop computers. The prize is valued at US$11,500.

The deadline for submitting entries is September 29, 2006.

The winner will be selected by a 5-member panel consisting of experts from scientific, engineering, and medical disciplines.

Past winners of the prize include: Ms. Kim Sherman of Sandalwood, Inc; Dr. F.C.T. van der Helm of Delft University of Technology, the Netherlands; and Dr. A.L. Hof of University of Groningen, the Netherlands.

To learn more about the prize, go to the company's website.

19:49 Posted in Research institutions & funding opportunities | Permalink | Comments (0) | Tags: Positive Technology

May 07, 2006

Video game Duke Nukem used to investigate how sleep affects long-term memory

Results showed that sleep-deprived gamers recalled information from a different part of the brain to those who slept.

Writing in Proceedings of the National Academy of Sciences (PNAS), the team said its work also shed light on how we navigate in the real world.

See the full story

14:00 Posted in Serious games | Permalink | Comments (0) | Tags: Positive Technology

Living with three arms

Via VRoot

(From Virtual Worldlets)

Virtual Reality is just beginning to head down the full body sensation reproduction path. We are at the very early stages of being able to recreate parts of the physical form entirely in the virtual. This is a concept which is likely to have a very profound effect upon how we deal with the world around us.

At the moment, VR technology for reproducing virtual limbs is limited to a single limb at a time. This is done by mimicking the movements of the user's physical limb: the signals sent down the brainstem are read, translated and implemented, rather than the movement of the arm being monitored and duplicated. This is faster than mimicking the movement observationally, and it also allows the technique to work if the user has no physical arm to mimic, so long as the brain is capable of controlling one (e.g., quadriplegics and limb amputees).
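The excerpt describes reading neural signals and "translating and implementing them" as limb movement, without saying how. One common textbook approach is a linear decoder that maps a vector of signal features to joint velocities, which are then integrated to move the virtual limb. The sketch below assumes that approach; the weights, feature vector and both function names are hypothetical and are not the system described in the article.

```python
from typing import List, Sequence


def decode_velocity(
    features: Sequence[float],
    weights: Sequence[Sequence[float]],
    bias: Sequence[float],
) -> List[float]:
    """Hypothetical linear decoder: one weight row per joint axis maps the
    neural-signal feature vector to a velocity command for that axis."""
    if len(weights) != len(bias):
        raise ValueError("need one bias term per weight row")
    if any(len(row) != len(features) for row in weights):
        raise ValueError("each weight row must match the number of features")
    return [sum(w * f for w, f in zip(row, features)) + b for row, b in zip(weights, bias)]


def step_limb(position: Sequence[float], velocity: Sequence[float], dt: float) -> List[float]:
    """Integrate the decoded velocities over one time step to move the virtual limb."""
    return [p + v * dt for p, v in zip(position, velocity)]
```

With features `[1.0, 2.0]`, weights `[[0.5, 0.0], [0.0, 1.0]]` and bias `[0.0, 0.5]`, the decoded velocity is `[0.5, 2.5]`. Because the decoder never looks at a physical arm, the same loop works when there is no arm to observe, which is the point the excerpt makes.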

13:51 Posted in Virtual worlds | Permalink | Comments (0) | Tags: Positive Technology

HCI Research Assistant - London

Via Usability News

Deadline: 15 May 2006

We currently have 17 employees, and are continuing to grow steadily. We are now looking for a Research Assistant to join our friendly and enthusiastic team. This is a great opportunity for a future usability / UCD consultant to gain the experience they need while playing a very important role in a successful company.

The responsibilities for this role include:

- Providing support to consultants during user research – this will mainly consist of viewing usability tests remotely and taking notes in a fieldsheet template about the users' behaviour, including marking issues and responses to particular questions of interest.

- Assisting with analysis – following testing, helping the consultant to process the information gathered during user research. This may involve completing a findings log with observed issues, holding an analysis workshop with the consultant, or processing results from the user research e.g. card sorting analysis.

- Set-up of technical equipment – helping the consultant to get ready for the research. This may involve setting up rooms and equipment for testing or configuring our recording software.

- Video editing – identifying suitable illustrative video clips with the consultant and making the clips. May also involve making full-session recordings for the client.

- Supporting the research process in other ways as required – may include helping with the user recruitment process, for example contacting users to arrange research sessions, and any small tasks required to help the consultant on the project, e.g. proofreading, printing and binding reports, burning CDs etc.
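The card sorting analysis mentioned among these responsibilities is often summarised as a co-occurrence (similarity) matrix: for each pair of cards, the share of participants who placed them in the same group. Here is a minimal sketch assuming each participant's sort is recorded as a list of sets of card names; the function name and data layout are illustrative, not a description of the agency's own tools.

```python
from collections import Counter
from itertools import combinations
from typing import Dict, List, Set, Tuple


def cooccurrence(sorts: List[List[Set[str]]]) -> Dict[Tuple[str, str], float]:
    """For every pair of cards, the fraction of participants who put them in the same group.

    sorts holds one entry per participant; each entry is that participant's list of groups.
    """
    counts: Counter = Counter()
    for groups in sorts:
        for group in groups:
            # Sort so each pair is always keyed in the same (alphabetical) order.
            for a, b in combinations(sorted(group), 2):
                counts[(a, b)] += 1
    n = len(sorts)
    return {pair: c / n for pair, c in counts.items()}
```

Pairs that no participant grouped together simply do not appear in the result, which corresponds to a similarity of 0. The matrix is a typical input to cluster analysis or a dendrogram.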

You need to have:

- UCD/HCI knowledge – you need to have a good understanding of the HCI field of knowledge, preferably with a formal qualification in this area such as an MSc. Perhaps you are a recent grad looking to gain experience in the world of usability.

- Note-taking abilities – you need to be able to take notes rapidly using a computer (good typing speed essential) and be confident using Word and Excel.

- Strong analytical skills to help develop insights out of the data gathered.

- Methodological skills are an advantage, e.g. experience of performing usability tests, expert walkthroughs, card sorting etc.

- Interpersonal skills - you need to be able to communicate in a clear and confident professional manner. This is not primarily a client-facing role, but you may come into contact with clients while viewing, and you need to behave appropriately at all times.

- Technical competence – you are broadly confident using technology, and willing to learn about equipment configurations and software used in user research. Experience with TechSmith Morae a big plus.

- Can-do spirit - we are a smallish company and you need to work with the team – to be willing to pitch in to help with anything and to contribute positively to the culture.

- Accountability – you care about your work being brilliant quality, delivered on time and useful for the consultant and the end client.

- Ambition – our aim with this role is to help you develop, and over time we will give you more responsibilities and expose you to more of the project cycle.

- Passion – You are genuinely excited at the prospect of UCD principles being applied to everything in the world, for evermore.

What we can give you

- Great experience in a leading UCD consultancy

- An opportunity to progress to a Consultant position with time and support

- Brilliant people to work with

- A chance to work with the best client list on the most interesting projects in the business

- Flexibility to pursue your own HCI interests and build your name in the community.

Please send your CV and why you think you are right for this position to Pippa Gawley at pippa@amber-light.co.uk, as soon as possible and by Monday 15th May at the latest. Please note that we can only consider people who are already eligible to work in the UK for this position. No agencies please.

13:45 Posted in Research institutions & funding opportunities | Permalink | Comments (0) | Tags: Positive Technology

Kung-fu computer game delivers real kicks

New Scientist reports about a new Kung-fu computer game that allows the user to deliver real kicks:

Kick Ass Kung Fu lets players fight onscreen enemies using real kicks, punches, head-butts or by wielding any improvised weapon they choose. A video camera captures their movements from one side and superimposes a two-dimensional silhouette of them onto a computer screen. A computer then translates the silhouette's moves into real-time computerised kicks and punches, enabling a player to take on virtual opponents.
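The article says the camera captures the player from one side and turns the image into a two-dimensional silhouette that can land blows on virtual opponents. A classic way to get such a silhouette is background subtraction against an image of the empty scene, followed by an overlap test with each opponent. This minimal sketch assumes that technique; the threshold, box format and function names are hypothetical, and the game's real pipeline may differ.

```python
from typing import List, Tuple

Frame = List[List[int]]  # grayscale pixel intensities, 0-255
Mask = List[List[bool]]


def silhouette(frame: Frame, background: Frame, threshold: int = 30) -> Mask:
    """Background subtraction: mark pixels that differ from the empty-scene
    background by more than threshold as part of the player's silhouette."""
    return [
        [abs(p - b) > threshold for p, b in zip(row, bg_row)]
        for row, bg_row in zip(frame, background)
    ]


def hits(mask: Mask, box: Tuple[int, int, int, int]) -> bool:
    """True if any silhouette pixel falls inside the opponent's box (x0, y0, x1, y1),
    where x1 and y1 are exclusive."""
    x0, y0, x1, y1 = box
    return any(mask[y][x] for y in range(y0, y1) for x in range(x0, x1))
```

Because only the silhouette matters, anything the player holds (the "improvised weapon" in the article) becomes part of the mask and can score hits too.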

13:45 Posted in Serious games | Permalink | Comments (0) | Tags: Positive Technology

Existing Technologies Combine to Make Automated Home

Via Smart Mobs

The National Institute of Advanced Industrial Science and Technology (Japan), Ymatic Ltd., and Biometrica Systems Asia Co. Ltd. have jointly developed a novel automated home. The home combines robots guided by IC tags, a biometric face authentication system, and a wireless network.

Source: Physorg

13:42 Posted in Pervasive computing | Permalink | Comments (0) | Tags: Positive Technology

Motorola Cell Phone Automatically Adjusts to the Elderly

Via Textually.org

Motorola has patented a new phone technology adaptable for the hearing impaired.

"By automatically detecting speech patterns of the elderly, the technology automatically boosts incoming and outgoing audio while simplifying menu structure and increasing font size.

The special needs of the elderly can often be in conflict with other users, such as the youth which often desire the smallest, most feature rich devices possible. Hence, the technology allows one device to adapt and accommodate to both market segments."
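The quote describes one device switching between two profiles once it detects an elderly user's speech patterns. How the detection works is not stated, so this sketch simply assumes some detector outputs a score between 0 and 1 and applies a threshold. The `PhoneSettings` fields, the 0.7 threshold and the specific gain and font values are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PhoneSettings:
    audio_gain_db: float = 0.0   # extra gain on incoming and outgoing audio
    font_size_pt: int = 10
    menu_mode: str = "full"


def adapt_settings(elderly_score: float, threshold: float = 0.7) -> PhoneSettings:
    """Hypothetical rule: when a speech-pattern detector's score (0..1) reaches the
    threshold, boost audio, enlarge fonts and simplify menus; otherwise use defaults."""
    if not 0.0 <= elderly_score <= 1.0:
        raise ValueError("score must lie in [0, 1]")
    if elderly_score >= threshold:
        return PhoneSettings(audio_gain_db=6.0, font_size_pt=16, menu_mode="simplified")
    return PhoneSettings()
```

Keeping both profiles on the same handset, and choosing between them at run time, is what lets one device serve both of the market segments the quote mentions.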

13:35 Posted in Wearable & mobile | Permalink | Comments (0) | Tags: Positive Technology

Virtual reality immersion method of distraction to control experimental ischemic pain

Virtual reality immersion method of distraction to control experimental ischemic pain.

Isr Med Assoc J. 2006 Apr;8(4):261-5

Authors: Magora F, Cohen S, Shochina M, Dayan E

BACKGROUND: Virtual reality immersion has been advocated as a new effective adjunct to drugs for pain control. The attenuation of pain perception and unpleasantness has been attributed to the patient's attention being diverted from the real, external environment through immersion in a virtual environment transmitted by an interactive 3-D software computer program via a VR helmet.

OBJECTIVES: To investigate whether VR immersion can extend the amount of time subjects can tolerate ischemic tourniquet pain.

METHODS: The study group comprised 20 healthy adult volunteers. The pain was induced by an inflated blood pressure cuff during two separate, counterbalanced, randomized experimental conditions for each subject: one with VR and the control without VR exposure. The VR equipment consisted of a standard computer, a lightweight helmet and an interactive software game.

RESULTS: Tolerance time to ischemia was significantly longer for VR conditions than for those without (P < 0.001). Visual Analogue Scale (0-10) ratings were recorded for pain intensity, pain unpleasantness, and the time spent thinking about pain. Affective distress ratings of unpleasantness and of time spent thinking about pain were significantly lower during VR as compared with the control condition (P < 0.003 and P < 0.001, respectively).

CONCLUSIONS: The VR method in pain control was shown to be beneficial. The relatively inexpensive equipment will facilitate the use of VR immersion in clinical situations. Future research is necessary to establish the optimal selection of clinical patients appropriate for VR pain therapy and the type of software required according to age, gender, personality, and cultural factors.
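The methods describe two "counterbalanced, randomized" conditions per subject. In a two-condition within-subject design, that usually means half the subjects do VR first and half do the control first, with the split chosen at random, so order effects cancel out. Here is a minimal sketch of such an assignment; the function name and seed handling are illustrative and not taken from the paper.

```python
import random
from typing import Dict, Hashable, Iterable, Optional, Tuple


def counterbalance(
    subject_ids: Iterable[Hashable], seed: Optional[int] = None
) -> Dict[Hashable, Tuple[str, str]]:
    """Give each subject an order for the two conditions so that a randomly chosen
    half does VR first and the other half does the control condition first."""
    ids = list(subject_ids)
    if len(ids) % 2:
        raise ValueError("use an even number of subjects so the two orders stay balanced")
    rng = random.Random(seed)
    rng.shuffle(ids)
    half = len(ids) // 2
    orders = {sid: ("VR", "control") for sid in ids[:half]}
    orders.update({sid: ("control", "VR") for sid in ids[half:]})
    return orders
```

With 20 volunteers, as in this study, `counterbalance(range(1, 21), seed=42)` yields 10 subjects in each order, and fixing the seed makes the allocation reproducible.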

13:33 Posted in Cybertherapy | Permalink | Comments (0) | Tags: Positive Technology

Is there "feedback" during visual imagery?

Is there "feedback" during visual imagery? Evidence from a specificity of practice paradigm.

Can J Exp Psychol. 2006 Mar;60(1):24-32

Authors: Krigolson O, Van Gyn G, Tremblay L, Heath M

13:31 Posted in Mental practice & mental simulation | Permalink | Comments (0) | Tags: Positive Technology

Cognitive Computing

Nicolas Nova quotes an interview with Dharmendra Modha, chair of the Almaden Institute at IBM's research center in San Jose and IBM's leader for cognitive computing, who argues for a shift in terminology from what was previously called “Artificial Intelligence” to “Cognitive Computing”:

Q: Why use the term “cognitive computing” rather than the better-known “artificial intelligence”?

A: The rough idea is to use the brain as a metaphor for the computer. The mind is a collection of cognitive processes—perception, language, memory, and eventually intelligence and consciousness. The mind arises from the brain. The brain is a machine—it’s biological hardware.

Cognitive computing is less about engineering the mind than it is the reverse engineering of the brain. We’d like to get close to the algorithm that the human brain [itself has]. If a program is not biologically feasible, it’s not consistent with the brain.

13:26 Posted in AI & robotics | Permalink | Comments (0) | Tags: Positive Technology

Mobile Processing Workshop: Interactive Applications for Mobile Phones

Lisbon, Portugal, 15-19 May 2006, Espaço Atmosferas, Rua da Boavista, 67, Lisbon.

From the workshop's website:

The mobile phone has reached a level of adoption that far exceeds that of the personal computer. As a result, it is an emerging platform for new services and applications that have the potential to change the way we live and communicate.

Mobile Processing is an open source project that aims to drive this innovation by increasing the audience of potential designers and developers through a free, open source prototyping tool based on Processing and the open sharing of ideas and information. This workshop will introduce the Mobile Processing project and prototyping tool and provide hands-on instruction and experience with programming custom applications for the mobile phone.

Contents:

- Introduction to Mobile Processing, phone hardware and development platforms.

- Survey of projects with the phone as both the platform and subject for new forms of interactive applications and electronic art.

- Basic programming and prototyping concepts with 2D graphics and animation.

- Phone input/output handling including keyboard, camera, sound and vibration.

- Internet networking. Parsing and generating XML-formatted data.

- Text messaging and Bluetooth networking.
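"Parsing and generating XML-formatted data" is the kind of task the networking session covers. The workshop itself uses Mobile Processing (a Java-based environment), so the following is only a language-neutral illustration written in Python with the standard `xml.etree.ElementTree` module. The `<messages>`/`<msg from="...">` format and both function names are hypothetical.

```python
import xml.etree.ElementTree as ET
from typing import List, Tuple


def parse_messages(xml_text: str) -> List[Tuple[str, str]]:
    """Parse <messages><msg from="...">text</msg>...</messages> into (sender, text) pairs."""
    root = ET.fromstring(xml_text)
    return [(m.get("from", ""), m.text or "") for m in root.findall("msg")]


def build_message(sender: str, text: str) -> str:
    """Generate one <msg> element as an XML string (special characters are escaped)."""
    el = ET.Element("msg", attrib={"from": sender})
    el.text = text
    return ET.tostring(el, encoding="unicode")
```

`build_message("ana", "hi")` produces `<msg from="ana">hi</msg>`, and `parse_messages` turns a feed of such elements back into a list of pairs.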

The workshop will be hands-on, and by the end each participant will have developed a personal exercise.

Equipment

Laptops running Windows are highly recommended, though Mac OS X is acceptable; a built-in or USB Bluetooth adapter is recommended. Mobile phones with Java and Bluetooth support are recommended.

Participants are encouraged to bring their laptops.

Schedule

20 hours: 5 sessions × 4 h

15 to 19 May 2006, 18:00-22:00

Target

Basic programming skills, familiarity with Processing recommended but not required.

13:16 Posted in Wearable & mobile | Permalink | Comments (0) | Tags: Positive Technology

Robotic Action Painter

RAP (Robotic Action Painter) is a new generation of painting robot designed for museum or long-running exhibition displays. It is completely autonomous and needs very little assistance and maintenance. RAP creates its own paintings based on an artificial intelligence algorithm; it decides when the work is finished and signs it in the bottom right corner with its distinctive signature. The algorithm combines initial randomness, positive feedback and a 'color as pheromone' mechanism with positive/negative increments, based on a grid of nine RGB sensors. The 'sense of rightness' used to determine when the painting is finished is achieved not by any linear method (elapsed time or a sum) but through a kind of pattern recognition system.
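The description names three ingredients: initial randomness, positive feedback, and a "color as pheromone" mechanism with positive and negative increments driven by nine RGB sensors. One way to read that is as an ant-colony-style loop: colors the sensors see under the robot gain pheromone, all colors slowly evaporate, and the next color is drawn in proportion to pheromone. The sketch below is that interpretation only. The palette, reward/decay values and all function names are hypothetical, and the pattern-recognition stopping rule is not modeled.

```python
import random
from typing import List, Sequence, Tuple

RGB = Tuple[int, int, int]


def nearest_color(reading: RGB, palette: Sequence[RGB]) -> int:
    """Index of the palette color closest (squared RGB distance) to one sensor reading."""
    return min(
        range(len(palette)),
        key=lambda i: sum((a - b) ** 2 for a, b in zip(reading, palette[i])),
    )


def initial_pheromone(n_colors: int, rng: random.Random) -> List[float]:
    """Initial randomness: start every color at a random, strictly positive level."""
    return [rng.random() + 0.01 for _ in range(n_colors)]


def update_pheromone(
    pheromone: Sequence[float],
    readings: Sequence[RGB],
    palette: Sequence[RGB],
    reward: float = 1.0,
    decay: float = 0.1,
) -> List[float]:
    """Positive increment for each palette color seen by the sensor grid (positive
    feedback), a negative increment for every color (evaporation), floored at 0.01."""
    seen = [0] * len(palette)
    for reading in readings:
        seen[nearest_color(reading, palette)] += 1
    return [max(0.01, p + reward * s - decay) for p, s in zip(pheromone, seen)]


def choose_color(pheromone: Sequence[float], rng: random.Random) -> int:
    """Pick the next paint color with probability proportional to its pheromone level."""
    return rng.choices(range(len(pheromone)), weights=pheromone, k=1)[0]
```

The floor of 0.01 keeps every color selectable, so the positive feedback makes dominant colors more likely without completely freezing the palette.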

13:10 Posted in Cyberart | Permalink | Comments (0) | Tags: Positive Technology

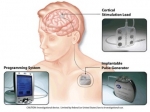

New implantable device system for stroke rehabilitation

Via Medgadget

The US company Northstar Neuroscience is developing an implantable device system intended to enhance neuroplasticity and recovery of function in patients who have had a stroke.

From the company's website:

Following a stroke, the only proven treatment currently available for patients with hemiparesis (motor deficits) or aphasia (speech deficits) is rehabilitative therapy. Unfortunately, many patients do not achieve satisfactory functional improvement from rehabilitative therapy. There is considerable evidence that the brain can undergo significant repair and recovery after an injury such as a stroke by a process termed neuroplasticity. As reported in numerous scientific publications, neuroplasticity involves the recruitment of existing alternative neural pathways and the development of new synaptic connections, i.e., reorganization of the brain circuitry. Within hours after a stroke, the brain will begin to recruit existing alternative neural pathways in an attempt to meet functional demands. Over time and with use, there is an increase in the density of connections between the neurons that comprise these neural pathways. However, natural gains in motor recovery and speech generally plateau within several months after a stroke, with many stroke survivors achieving only minimal recovery of function...

Together, we have demonstrated that cortical stimulation of the healthy brain tissue adjacent to the "stroke," in combination with rehabilitation, enhances motor recovery and suggests that cortical stimulation for stroke patients may facilitate neuroplasticity...

The Northstar Stroke Recovery System consists of the following components:

-- Implantable pulse generator (IPG) - an electrical stimulator that is implanted in the pectoral (upper chest) area.

-- Cortical stimulation lead - an electrode connected to the IPG, which is used to deliver stimulation to the cortex. The electrode is placed on top of the dura, which is the membrane that covers the brain's surface.

-- Programming system - a handheld computer attached to a programming device which allows communication with the implanted IPG device. This system allows the clinician to turn the device on/off and to set/modify stimulation parameters.

13:03 Posted in Cybertherapy | Permalink | Comments (0) | Tags: Positive Technology

May 06, 2006

New personal video display device

Via Emerging Technology Trends

The new personal video display device developed by the Israeli company Mirage Innovations Ltd looks like a simple pair of glasses. According to the company, the technology

"is based on the principle of transforming a thin transparent plate into a complete wearable personal display system. The diffractive planar optics is combined with a microdisplay source, such as micro LCD, LCOS or OLED"

One of the most interesting features of the new display, according to the company, is that it eliminates the dizziness usually associated with this kind of display. The product is not currently on the market.

19:05 Posted in Virtual worlds | Permalink | Comments (0) | Tags: Positive Technology

LA Immersive Technologies Enterprise

Via the Presence Mailing List

(From The Acadiana)

Virtual reality is commonly the stuff of science fiction — but the real-world applications made possible by using computer simulations inside a virtual reality environment are as tangible as diapers and oil wells.

The soon-to-open Louisiana Immersive Technologies Enterprise will make any number of research and business applications possible, said Carolina Cruz-Neira, the newly hired chief scientist at LITE.

Cruz-Neira gave an overview of LITE on Thursday at the TechSouth technology summit.

At the heart of LITE are a supercomputer and a 6-sided visual immersion cave. The supercomputer can process and simulate an enormous amount of data, then project that visual data onto the screens of the cave, which serves as a virtual reality environment that allows researchers to better understand that data...

Read the full article

18:58 Posted in Virtual worlds | Permalink | Comments (0) | Tags: Positive Technology

May 03, 2006

Warriors of the future will 'taste' battlefield

Via CNN

PENSACOLA, Florida (AP) -- In their quest to create the super warrior of the future, some military researchers aren't focusing on organs like muscles or hearts. They're looking at tongues.

By routing signals from helmet-mounted cameras, sonar and other equipment through the tongue to the brain, they hope to give elite soldiers superhuman senses similar to owls, snakes and fish.

Researchers at the Florida Institute for Human and Machine Cognition envision their work giving Army Rangers 360-degree unobstructed vision at night and allowing Navy SEALs to sense sonar in their heads while maintaining normal vision underwater -- turning sci-fi into reality.

The device, known as "Brain Port," was pioneered more than 30 years ago by Dr. Paul Bach-y-Rita, a University of Wisconsin neuroscientist. Bach-y-Rita began routing images from a camera through electrodes taped to people's backs and later discovered the tongue was a superior transmitter...
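A camera frame has far more pixels than there are electrodes touching the tongue, so the image has to be reduced to the electrode grid before it can be delivered as stimulation. A simple way to do that is to average the image over blocks and quantize each block's brightness to a small number of stimulation levels. This sketch assumes that approach; the grid size, the eight levels and the function name are hypothetical and do not describe the actual device's processing.

```python
from typing import List


def to_electrode_grid(image: List[List[int]], rows: int, cols: int, levels: int = 8) -> List[List[int]]:
    """Average a grayscale image (0-255) over a rows x cols grid of blocks and quantize
    each block's mean brightness to an integer stimulation level in [0, levels - 1]."""
    h, w = len(image), len(image[0])
    if h % rows or w % cols:
        raise ValueError("image size must be divisible by the grid size")
    bh, bw = h // rows, w // cols
    grid = []
    for r in range(rows):
        out_row = []
        for c in range(cols):
            block = [image[y][x] for y in range(r * bh, (r + 1) * bh) for x in range(c * bw, (c + 1) * bw)]
            mean = sum(block) / len(block)
            # Map 0-255 onto levels bins; min() guards the top edge.
            out_row.append(min(levels - 1, int(mean * levels / 256)))
        grid.append(out_row)
    return grid
```

A 4 × 4 image that is bright on the left and dark on the right becomes `[[7, 0], [7, 0]]` on a 2 × 2 grid: strong stimulation on the left electrodes, none on the right.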

Continue to read the full article

14:58 Posted in Brain training & cognitive enhancement | Permalink | Comments (0) | Tags: Positive Technology

May 02, 2006

Cardiac responses induced during thought-based control of a virtual environment

Cardiac responses induced during thought-based control of a virtual environment.

Int J Psychophysiol. 2006 Apr 25;

Authors: Pfurtscheller G, Leeb R, Slater M

Cardiac responses induced by motor imagery were investigated in 3 subjects in a series of experiments with a synchronous (cue-based) Brain-Computer Interface (BCI). The cue specified right hand vs. leg/foot motor imagery. After a number of BCI training sessions reaching a classification accuracy of at least 80%, the BCI experiments were carried out in an immersive virtual environment (VE), commonly referred to as a "CAVE". In this VE, the subjects were able to move along a virtual street by motor imagery alone. The thought-based control of the VE resulted in an acceleration of the heart rate in 2 subjects and a heart rate deceleration in the other subject. In control experiments in front of a PC, all 3 subjects displayed a significant heart rate deceleration of about 3-5%. This heart rate decrease during motor imagery in a normal environment is similar to that observed during preparation for a voluntary movement. The heart rate acceleration in the VE is interpreted as an effect of increased mental effort to walk as far as possible in the VE.
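The abstract reports heart rate changes as percentages relative to a reference level. As a worked example with made-up numbers: a baseline of 75 bpm falling to 72 bpm during motor imagery is a change of 100 × (72 − 75) / 75 = −4%, inside the 3-5% deceleration range reported for the PC condition. The helper names below are hypothetical, and computing heart rate from R-R intervals is an assumption about the raw data, not a detail from the paper.

```python
from statistics import mean
from typing import Sequence


def heart_rate_bpm(rr_intervals_s: Sequence[float]) -> float:
    """Mean heart rate in beats per minute from R-R intervals given in seconds."""
    return 60.0 / mean(rr_intervals_s)


def percent_change(baseline_bpm: float, task_bpm: float) -> float:
    """Relative heart rate change during the task; negative values mean deceleration."""
    return 100.0 * (task_bpm - baseline_bpm) / baseline_bpm
```

R-R intervals of 0.8 s correspond to 75 bpm, and `percent_change(75.0, 72.0)` gives −4.0.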

22:47 Posted in Brain-computer interface | Permalink | Comments (0) | Tags: Positive Technology

Steady-state somatosensory evoked potentials

Steady-state somatosensory evoked potentials: suitable brain signals for brain-computer interfaces?

IEEE Trans Neural Syst Rehabil Eng. 2006 Mar;14(1):30-7

Authors: Müller-Putz GR, Scherer R, Neuper C, Pfurtscheller G

One of the main issues in designing a brain-computer interface (BCI) is to find brain patterns that can be easily detected. One of these patterns is the steady-state evoked potential (SSEP). SSEPs induced through the visual sense have already been used for brain-computer communication. In this work, a BCI system is introduced based on steady-state somatosensory evoked potentials (SSSEPs). Transducers were used to stimulate both index fingers using tactile stimulation in the "resonance"-like frequency range of the somatosensory system. Four subjects participated in the experiments and were trained to modulate induced SSSEPs. Two of them learned to modify the patterns in order to set up a BCI with an accuracy of between 70% and 80%. Results presented in this work give evidence that it is possible to set up a BCI which is based on SSSEPs.
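A steady-state evoked potential shows up as extra power at the stimulation frequency, so a basic detector measures power at that single frequency on each EEG channel. The sketch below does this with a single-bin discrete Fourier transform and then compares two channels. It assumes, for illustration only, that attending to one finger raises the response over the opposite (contralateral) hemisphere. The 27 Hz frequency, the sampling rate and the function names are hypothetical; the paper's own feature extraction and classifier may differ.

```python
import math
from typing import Sequence


def band_power(signal: Sequence[float], fs: float, freq: float) -> float:
    """Power of a signal at one frequency via a single-bin discrete Fourier transform."""
    n = len(signal)
    re = sum(x * math.cos(2 * math.pi * freq * t / fs) for t, x in enumerate(signal))
    im = -sum(x * math.sin(2 * math.pi * freq * t / fs) for t, x in enumerate(signal))
    return (re * re + im * im) / n


def classify(left_channel: Sequence[float], right_channel: Sequence[float],
             fs: float, stim_freq: float) -> str:
    """Hypothetical rule: the hemisphere showing the stronger response at the stimulation
    frequency is taken to process the attended (opposite-side) finger."""
    p_left = band_power(left_channel, fs, stim_freq)
    p_right = band_power(right_channel, fs, stim_freq)
    return "right finger" if p_left > p_right else "left finger"
```

For a pure sinusoid of amplitude A lasting an integer number of cycles, `band_power` returns A² · n / 4, so with 256 samples a 27 Hz wave of amplitude 2 sampled at 256 Hz gives 256.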

22:45 Posted in Brain-computer interface | Permalink | Comments (0) | Tags: Positive Technology